Brain-computer interface (BCI) technologies are no longer hypothetical, yet fundamental aspects of the technology remain unaddressed by both ethicists and policy-makers. Two new papers outline the outstanding ethical issues, offer guidance for addressing them, and offer particular insight into the field of BCI tech for cognitive enhancement.

“BCI technologies are devices that detect brain signals conveying intention and translate them into executable output by a computer,” says Allen Coin, a graduate student at North Carolina State University and lead author of both papers. “BCI technologies can also provide feedback to the user, reflecting whether he or she attained a goal or completed a desired action.”

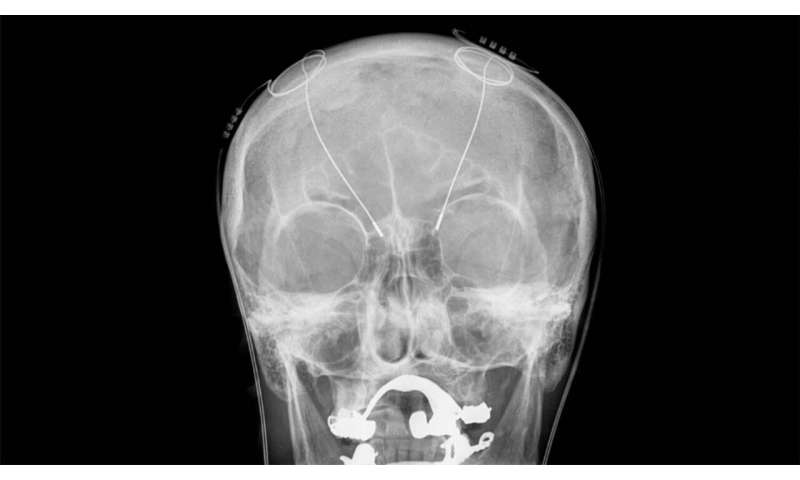

“BCI devices can be non-invasive devices that users wear, or they can be invasive devices, which are surgically implanted,” says Veljko Dubljević, an assistant professor in NC State’s Science, Technology & Society program and co-author of both papers. “The invasive devices are more efficient, since they can read signals directly from the brain. However, they also raise more ethical concerns.

“For example, invasive BCI technologies carry more associated risks such as surgery, infection, and glial scarring—and invasive BCI devices would be more difficult to replace as technology improves.”

Many BCI devices, such as cochlear implants, are already in use. And this field of technology has garnered increased attention due to a company called Neuralink, which is focused on building what it calls a brain-machine interface.

“Neuralink highlights the immediacy of these ethical questions,” Dubljević says. “We can’t put the questions off any more. We need to address them now.”

And Dubljević would know.

He, Coin and NC State undergraduate Megan Mulder recently published a comprehensive review of the research literature that addresses ethical considerations of BCI. A previous review had been done as recently as 2016, yet almost as much research on the ethics of BCI has been done since then as had been done before it.

One of the key takeaways from the analysis is that ethicists have not adequately addressed two areas that should be prioritized for future work: the physical effects of BCI and its psychological effects.

“On the physical side, there’s been little analysis by ethicists of the potential long-term health effects of BCI on users,” Coin says. “There’s also been inadequate discussion of ethical considerations related to the use of animals in testing invasive BCI technologies. These are, after all, surgical operations.”

On the psychological side, researchers found reason for concern but a lack of ethical analysis. For example, one study evaluated the use of invasive BCI to give patients with epilepsy advance warning of seizures. While some people adjusted well to the technology, others reported experiencing radical psychological distress.

“This is an issue that must be addressed,” Dubljević says. “We also need to assess questions on the extent to which users feel the BCI is an empowering extension of their minds, as opposed to challenging their sense of self. These are big questions, not afterthoughts.”

“Another thing our review really drove home is that the ethical analysis of BCI has been done by ethicists who are writing almost exclusively for other ethicists,” Coin says. “As a result, little of the work is framed in a way that is directly relevant—or even accessible—to policy makers and the public.”

The researchers also laid out a constructive framework for guiding future research on the ethics of BCI. At its core is one overarching question: “What would be the most legitimate public policies for regulating the development and use of various BCI neurotechnologies by healthy adults in a reasonably just, though not perfect, democratic society?”

“That question is long, technical, and steeped in scholarship of ethics and policy of new technology, but it’s critical to guiding the development of BCIs,” Dubljević says.

In a separate paper, Coin and Dubljević explored issues related to authenticity and machine-augmented intelligence. In this context, authenticity refers to the extent to which an individual feels that their abilities and accomplishments are their own, even if those abilities are augmented by BCI technologies, or their accomplishments were made with the assistance of BCI technologies.

“And machine-augmented intelligence refers to BCI technologies that enhance cognition—which are not yet on the market,” Dubljević says. “However, they are clearly a goal of BCI developers, including Neuralink.”

In this paper, the researchers focused on the fact that few concerns have been raised about authenticity in the context of cognitive enhancement BCI technologies, even though such concerns have come up repeatedly in regard to other cognitive enhancement tools, such as drugs that can improve concentration.

In other words, if ethicists care about whether people on “smart drugs” have “earned” their test scores, why don’t they have similar concerns about BCIs?

The answer appears to be twofold.

First of all, the majority of the proposed cognitive enhancement BCI devices are therapeutic in nature.

“When a patient receives a cochlear implant, that’s a BCI that helps them hear,” Coin says. “Nobody questions whether that patient’s hearing is authentic. Similarly, cognitive enhancement BCI devices are often presented as similarly therapeutic tools, helping patients overcome a challenge.

“However, we are now hearing more about the potential for BCI devices to enhance cognition in ways that go beyond therapeutic applications, yet there is still limited debate about their authenticity,” Coin says. “We think that this is because these BCI technologies are generally envisioned as being implants, which means the device would effectively be a permanent—or semi-permanent—change, or even an extension, to a person’s mind. These aren’t drugs that wear off. They’re there to stay.”
